District-Wide SEL Monitoring Dashboard

Empowering district and school leaders with data

The Situation

District leaders make up over 80% of Second Step’s purchasers and play an essential role in supporting staff as they adopt the program. To help staff and improve program outcomes, leaders rely on program fidelity data to answer key implementation questions.

User Context

Leaders want to see whether teachers have completed the initial setup steps.

Monitoring program fidelity is essential to supporting implementation and evaluating program outcomes.

District SEL leaders had to download each school's report individually, which made it difficult to compare progress across campuses.

Business Context

A district dashboard was the most-requested feature, both from our sales team and in the SWOT analysis.

The product team wanted to position the Second Step program in the market as a district-wide solution. Yet the product offered no district-level experiences.

The organization wanted to deliver this feature in under 5 months, before the start of the new school year.

Technical Context

The platform was not built to include a district model.

Teachers need to create classes before they can start teaching.

The dashboard would need to accommodate both a school leader role and a district leader role.

We lacked essential context data. Where should teachers be? How many classes should they have set up?

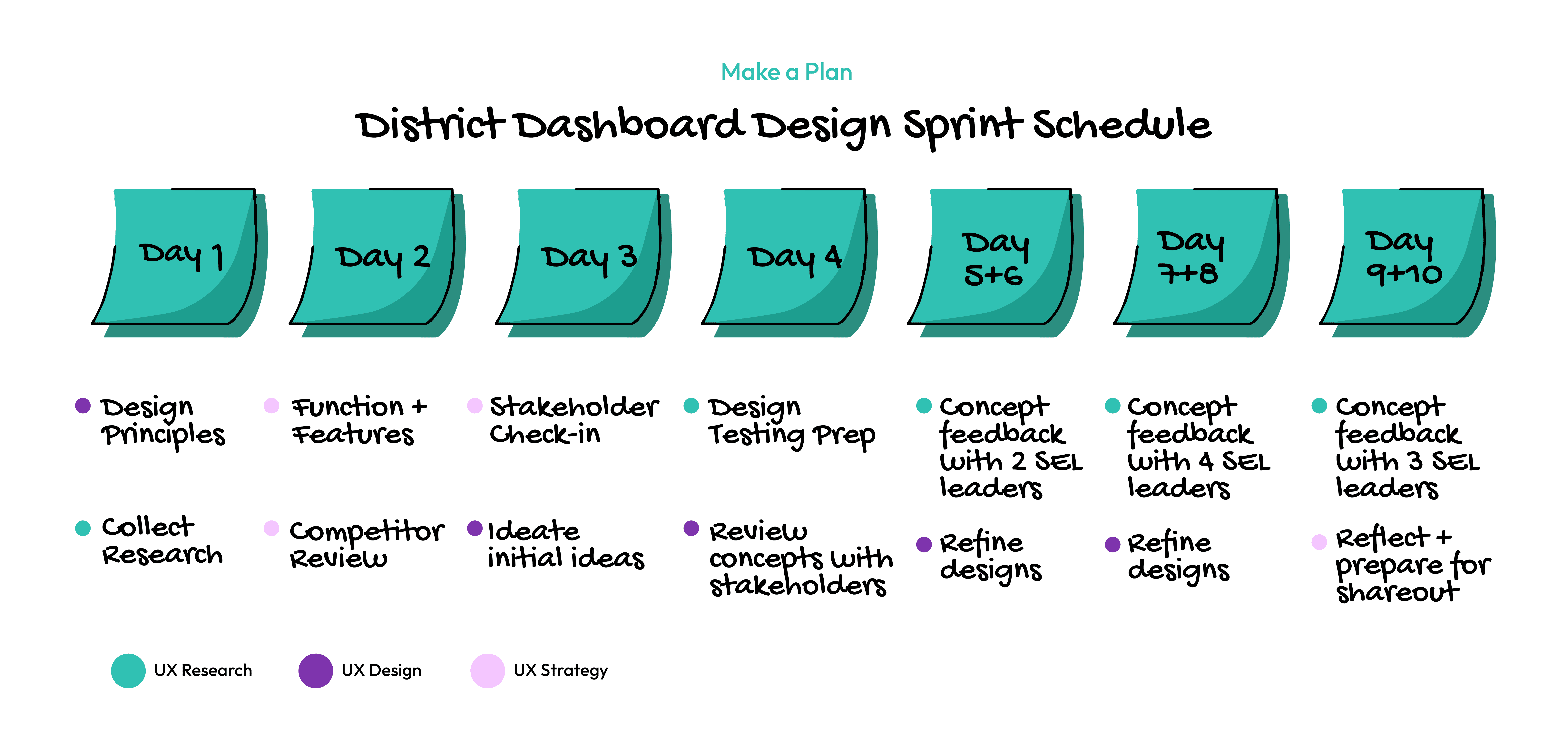

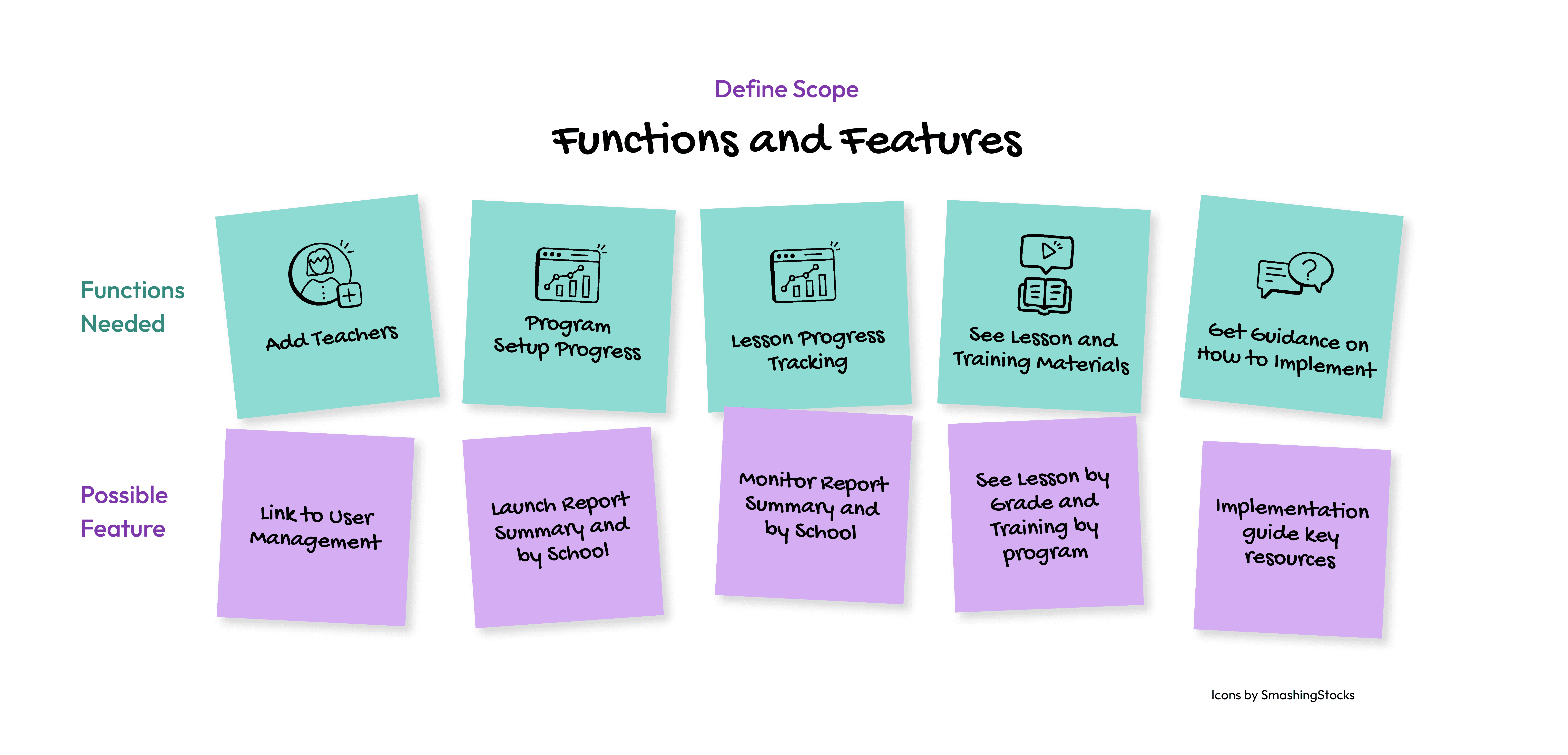

Design Sprint

The goal of the design sprint was to explore a range of data stories and test early data visualization concepts, while integrating ideas from SEL leaders directly into the design process.

Below are the 9 design iterations and the feedback on each concept, all completed in under 10 days.

Key Behavioral Change Analysis

With plenty of inspiration from the design sprint, we were ready to settle on a clear data story and form a hypothesis about where change would have the most impact, and for whom.

The next step was to align with a cross-functional team on translating what we learned from district leaders into the desired business and impact outcomes.

The Result

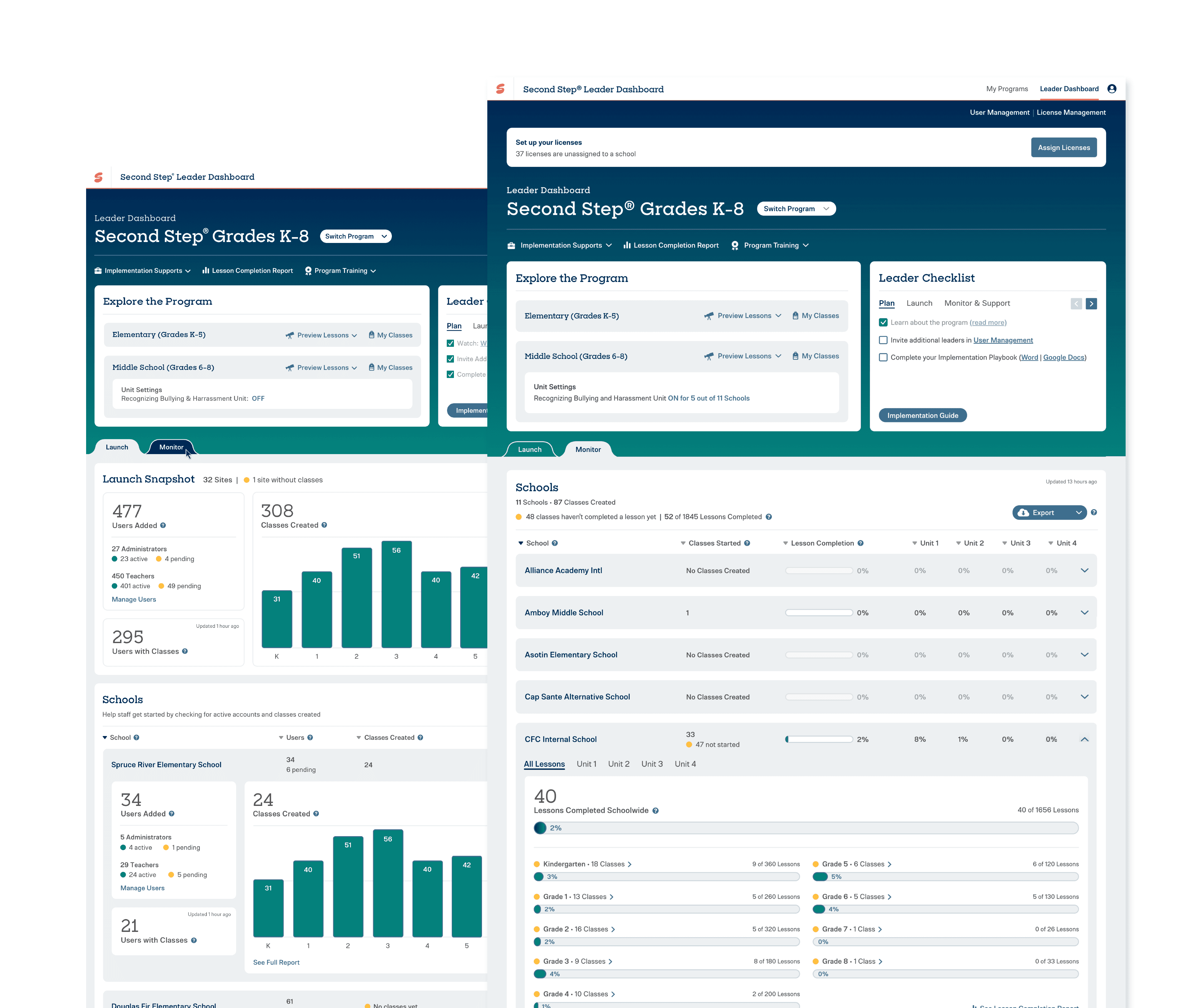

After data design exploration and usability testing, we arrived at a flexible leader dashboard that supported the required functions and features. To meet the behavior change goal, we created a scannable table with an at-a-glance indicator that helps leaders identify which schools need support.
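To make the idea of an at-a-glance indicator more concrete, here is a minimal Python sketch of how per-school progress might be rolled up into a district table that surfaces schools needing support. This is an illustration, not the dashboard's actual logic: the `SchoolProgress` record, its field names, the status labels, and the 50% completion threshold are all hypothetical. Because we lacked context data on where schools should be, any real threshold would be a product decision.

```python
from dataclasses import dataclass


# Hypothetical per-school record; field names are illustrative only.
@dataclass
class SchoolProgress:
    name: str
    classes_created: int  # classes teachers have set up (an initial setup step)
    lessons_completed: int
    lessons_assigned: int


def completion_rate(school: SchoolProgress) -> float:
    """Share of assigned lessons completed; 0.0 when nothing is assigned."""
    if school.lessons_assigned == 0:
        return 0.0
    return school.lessons_completed / school.lessons_assigned


def indicator(school: SchoolProgress, threshold: float = 0.5) -> str:
    """At-a-glance status. The 0.5 threshold is a hypothetical placeholder."""
    if school.classes_created == 0:
        # Teachers must create classes before they can start teaching.
        return "not started"
    return "on track" if completion_rate(school) >= threshold else "needs support"


# Lower rank sorts first, so schools needing attention rise to the top.
STATUS_RANK = {"not started": 0, "needs support": 1, "on track": 2}


def district_table(
    schools: list[SchoolProgress], threshold: float = 0.5
) -> list[tuple[str, int, str]]:
    """One row per school: (name, completion %, status), most urgent first."""
    rows = [
        (s.name, round(completion_rate(s) * 100), indicator(s, threshold))
        for s in schools
    ]
    # Sort by status rank, then by completion % within each status.
    rows.sort(key=lambda row: (STATUS_RANK[row[2]], row[1]))
    return rows


if __name__ == "__main__":
    example = [
        SchoolProgress("Hypothetical Elementary A", 4, 30, 40),
        SchoolProgress("Hypothetical Elementary B", 3, 10, 40),
        SchoolProgress("Hypothetical Elementary C", 0, 0, 0),
    ]
    for row in district_table(example):
        print(row)
```

Sorting "not started" and "needs support" rows to the top reflects the goal of letting leaders scan quickly for schools that need help. The exact ordering and labels are illustrative choices.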

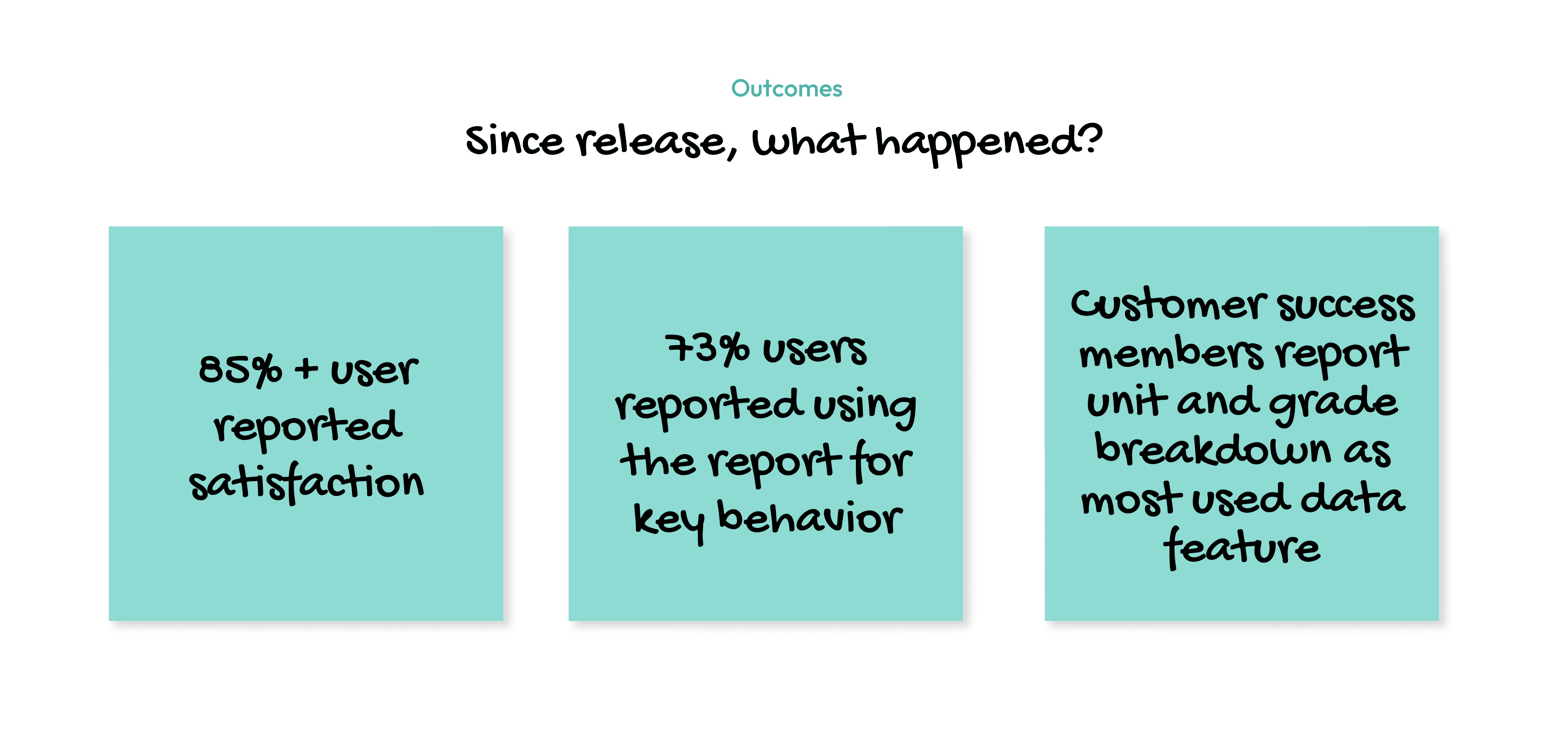

2 of the 3 product goals were met

MET High customer satisfaction with usability

MET Data is being used to take action and to support program impact

UNMET Leader login rates stayed consistent and did not increase

High customer satisfaction (250 users surveyed):

85% agreed that it is "easy to understand the lesson progress data"

89% agreed that they can "use the lesson progress information to support staff"

4.5 average rating that it was "easy to collect information for end-of-year implementation reports"

93% agreed that they used the new lesson completion reports to determine whether they "met their lesson completion goals"

73% of participants said they use the data to take action (the key behavior goal)

Internal team satisfaction:

The sales and support teams are happy too! The sales team is closing district purchases much more easily with the demo of this suite of implementation monitoring tools.

The customer success team reported that the data export and the unit breakdowns made their work supporting district leaders with data more impactful and strategic.

In reflection

Displaying data without context on where schools should be was the most challenging part of the data design. Despite that challenge, we met the project's behavior goals.

Once school settings and pacing data are available, our reporting can become more dynamic.

Because of the school-year schedule and project scope, we released features on a rolling basis. This made it hard to see the design experience as a whole, and some features went live mid-year, which may have cost us adoption among leaders. A few small adjustments would create a more coherent experience for school and district leaders using their data.